a16z: The new era of "Pixar", how will AI integrate movies and games?

Author: Jonathan Lai

Compiled by: Xiaobai Navigation coderworld

Over the past century, technological change has given rise to many of our favorite stories. In the 1930s, for example, Disney invented the multiplane camera and became the first to produce full-color animation with synchronized sound. This technological breakthrough led to the creation of the groundbreaking animated film Snow White and the Seven Dwarfs.

In the 1940s, Marvel and DC Comics rose to prominence in what became known as the "Golden Age of Comics", thanks to the widespread availability of the four-color rotary printing press and offset printing, which allowed comics to be printed at scale. The technology's limitations (low resolution, limited tonal range, dot-based printing on cheap newsprint) produced the iconic "pulp" look we still recognize today.

Likewise, Pixar was uniquely positioned in the 1980s to take advantage of a new technology platform: computers and 3D graphics. Co-founder Edwin Catmull was an early researcher at NYIT's Computer Graphics Lab and Lucasfilm, pioneering foundational CGI concepts and later delivering the first fully computer-generated animated feature, Toy Story. Pixar's graphics rendering suite, RenderMan, has been used in more than 500 films to date.

With each technological wave, what started as a novelty prototype evolved into a new format for deep storytelling, led by a new generation of creators. Today, we believe that the next Pixar is about to be born. Generative AI is driving a fundamental shift in creative storytelling, empowering a new generation of human creators to tell stories in entirely new ways.

Specifically, we believe that the Pixar of the next century will not be born through traditional movies or animation, but through interactive video. This new storytelling format will blur the lines between video games and TV/film, merging deep storytelling with audience agency and "play", and opening up a massive new market.

Games: The cutting edge of modern storytelling

There are two major waves emerging today that could accelerate the formation of a new generation of storytelling companies:

- Consumer shift towards interactive media (as opposed to linear/passive media, i.e. TV/movies)
- Technological advances driven by generative AI

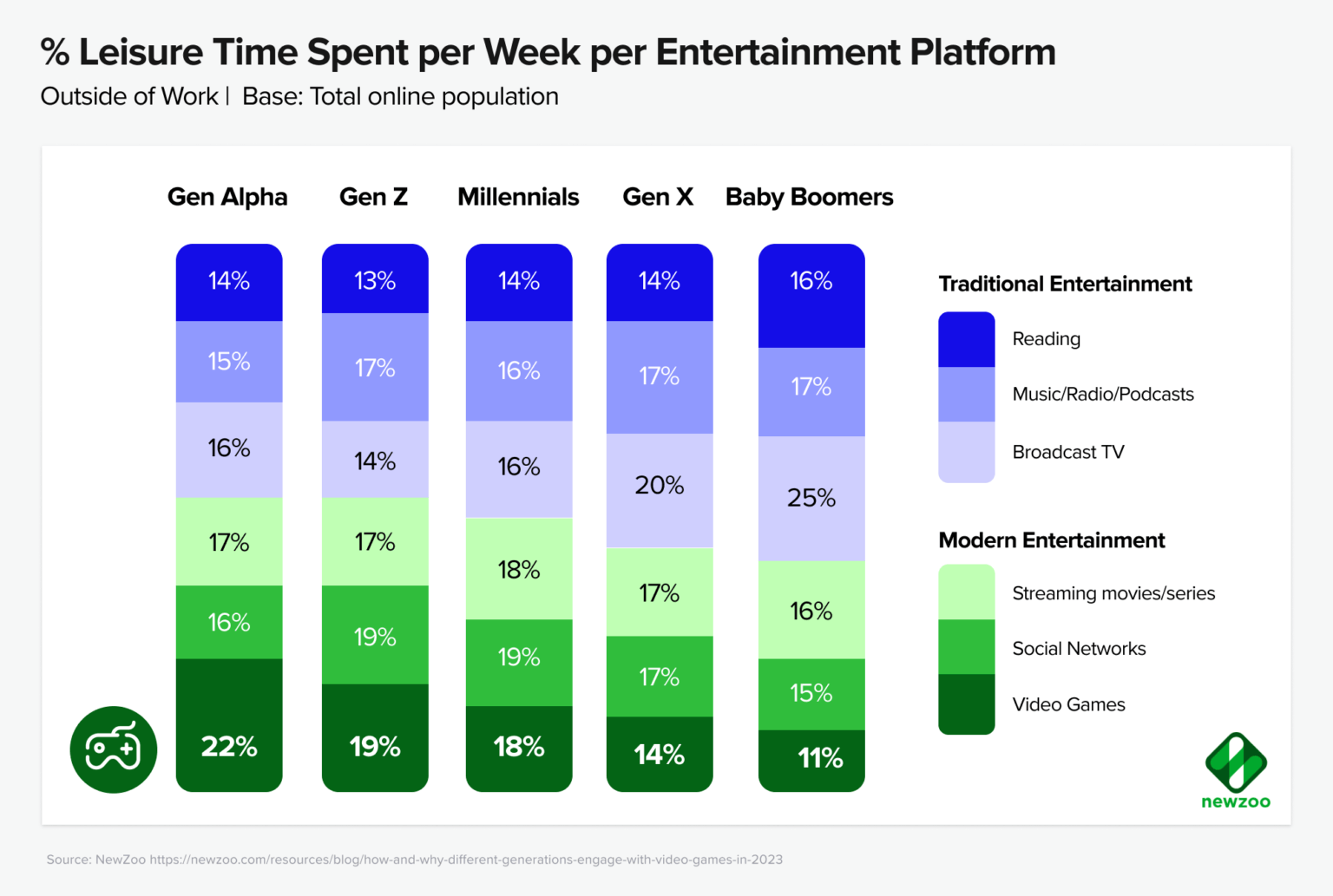

Over the past 30 years, we have seen a continued shift in consumer behavior, with games and interactive media becoming more popular with each generation. For Gen Z and younger generations, games are now the preferred way to spend free time, ahead of TV and movies. In 2019, Netflix CEO Reed Hastings wrote in a letter to shareholders: "We compete with Fortnite (and often lose to it) more than HBO." For most households, the question is "what are we playing" rather than "what are we watching".

While television, movies, and books still tell compelling stories, many of the most innovative and successful new stories are now being told in games. Take Harry Potter. The open-world role-playing game Hogwarts Legacy gives players an unprecedented level of immersion in the experience of being a new student at Hogwarts. The game was a 2023 bestseller, with revenue exceeding $1 billion, surpassing all the Harry Potter films except the last one, Harry Potter and the Deathly Hallows: Part 2 ($1.03 billion).

Gaming intellectual property (IP) has also seen huge success in recent TV and film adaptations. Naughty Dog's The Last of Us became HBO Max's most-watched series in 2023, averaging 32 million viewers per episode. The Super Mario Bros. Movie grossed $1.4 billion and had the biggest worldwide opening for an animated film. Then there is the highly acclaimed Fallout series, Paramount's Halo series, Tom Holland's Uncharted movie, Michael Bay's Skibidi Toilet movie, and the list goes on.

A key reason interactive media is so powerful is that active participation helps build a sense of intimacy with a story or universe. An hour of playing a game demands far more concentration than an hour of passively watching TV. Many games are also social, with multiplayer mechanics baked into their core design. The most memorable stories are often the ones we create and share with friends and family.

Audiences continue to interact with intellectual property across multiple mediums (watching, playing, creating, sharing), which makes the stories not just entertainment, but also part of a person's identity. The magical transformation occurs when a person grows from a casual "Harry Potter viewer" into a "loyal Potter fan". The latter is more lasting, building identity and community around what was once a solitary activity.

Overall, while the greatest stories in our history have been told in linear media, games and interactive media are where the stories of the future will be told, and therefore where we believe the most important storytelling companies of the next century will be born.

Interactive video: the combination of narrative and games

Given the dominance of gaming in culture, we believe the next Pixar will emerge through a media format that combines storytelling with gaming. One format we see great potential for is interactive video.

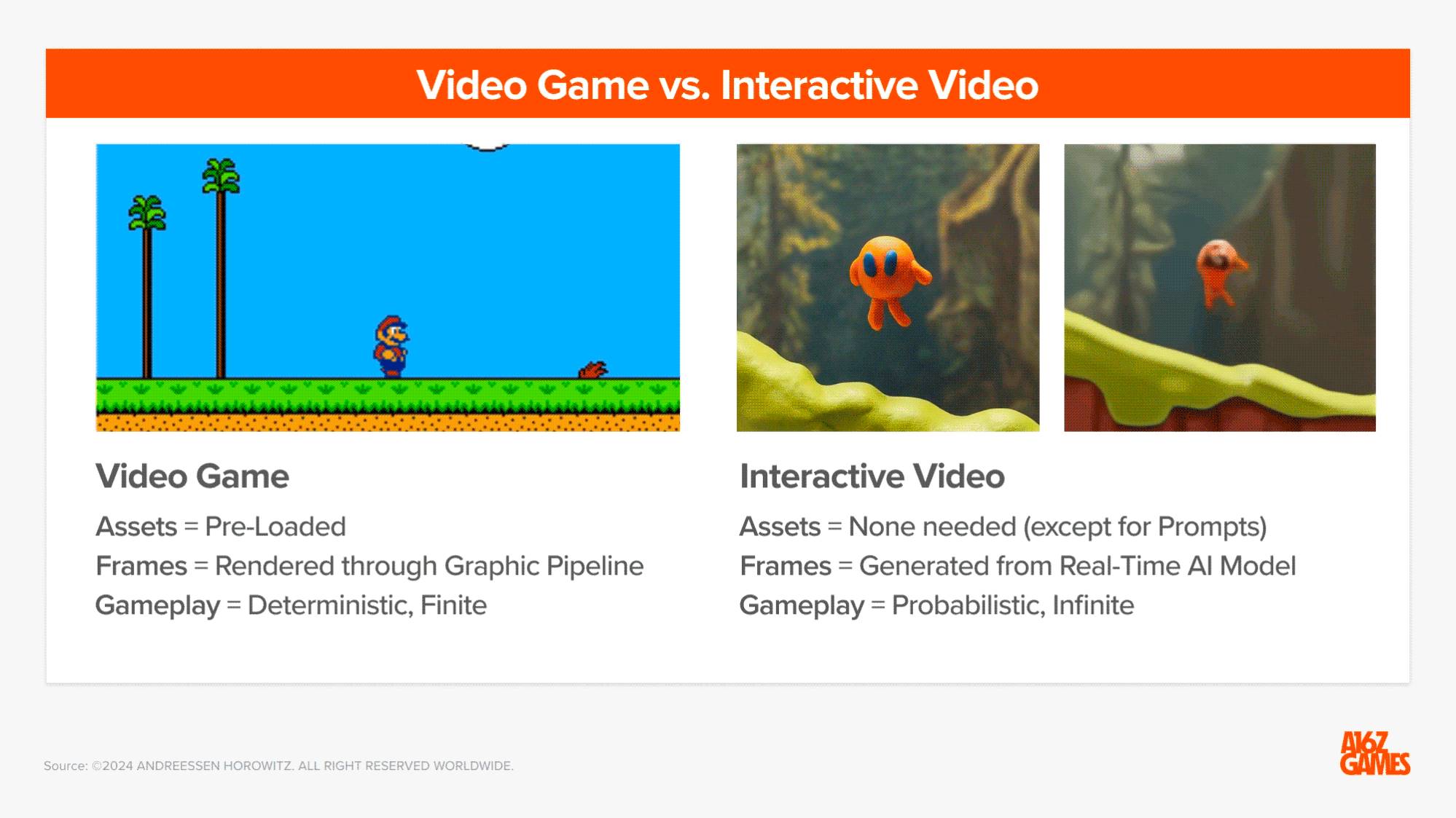

First, what is interactive video and how is it different from a video game? In a video game, the developer pre-loads a set of assets into the game engine. For example, in Super Mario Bros., artists designed the Mario character, trees, and backgrounds. The programmers set Mario to jump 50 pixels after the player presses the "A" button. The jump frames are rendered using the traditional graphics pipeline. This results in a highly deterministic and computational game architecture that the developer has full control over.

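Interactive video, by contrast, relies entirely on neural networks to generate frames in real time. Apart from a creative prompt (which can be text or a representative image), no assets need to be uploaded or created. A real-time AI image model takes the player's input (for example, the "up" button) and probabilistically infers the next game frame to generate.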
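The contrast between the two architectures can be sketched in a few lines. This is a toy illustration only: `engine_step`, `MarioState`, and `ToyFrameModel` are hypothetical names, and the "model" simply adds sampled noise where a real system would run a neural network.

```python
import random
from dataclasses import dataclass

JUMP_HEIGHT_PX = 50  # the jump height used in the Super Mario Bros. example


@dataclass
class MarioState:
    y: int = 0  # vertical position in pixels


def engine_step(state: MarioState, button: str) -> MarioState:
    """Traditional engine: the same state and input always give the same result."""
    if button == "A":
        return MarioState(y=state.y + JUMP_HEIGHT_PX)
    return state


class ToyFrameModel:
    """Hypothetical stand-in for a real-time neural frame generator."""

    def __init__(self, seed: int) -> None:
        self.rng = random.Random(seed)

    def next_frame(self, prev_frame: list[float], button: str) -> list[float]:
        # A real model would run a neural network here. This toy version nudges
        # every "pixel" by an input-dependent offset plus sampled noise, so the
        # output is a sample from a distribution rather than a fixed result.
        drift = 0.1 if button == "up" else 0.0
        return [p + drift + self.rng.gauss(0.0, 0.01) for p in prev_frame]


# Deterministic path: pressing "A" always moves Mario up by exactly 50 pixels.
state = engine_step(MarioState(), "A")

# Generative path: two differently seeded samplers produce different frames
# from the same previous frame and the same input.
frame_a = ToyFrameModel(seed=1).next_frame([0.0] * 4, "up")
frame_b = ToyFrameModel(seed=2).next_frame([0.0] * 4, "up")
```

The design point is where control lives: in the engine version the developer fixes the outcome in code, while in the generated version the outcome is whatever the model samples given the previous frame and the input.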

The promise of interactive video lies in merging the accessibility and narrative depth of television and film with the dynamic, player-driven systems of video games. Everyone knows how to watch TV and follow a linear story. By adding video generated in real time based on player input, we can create personalized and infinite gaming experiences, potentially enabling media titles to engage fans for thousands of hours. Blizzard's World of Warcraft has been around for more than 20 years and still retains about 7 million subscribers.

Interactive video also offers multiple ways to consume content: viewers can sit back and enjoy it as if they were watching a TV show, or actively play it on a mobile device or with a controller at other times. Allowing fans to experience the universe of their favorite intellectual property in as many ways as possible is at the heart of transmedia storytelling, and it helps foster a sense of intimacy with the IP.

Over the past decade, many storytellers have attempted to realize the vision of interactive video. An early breakthrough was Telltale's The Walking Dead, an interactive experience based on Robert Kirkman's comic book series in which players watch animated scenes unfold but make choices at key moments through dialogue and quick-time events. These choices, such as deciding which character to save during a zombie attack, create personalized story variations that make each playthrough unique. The Walking Dead launched in 2012 to huge success, winning multiple Game of the Year awards and selling more than 28 million copies.

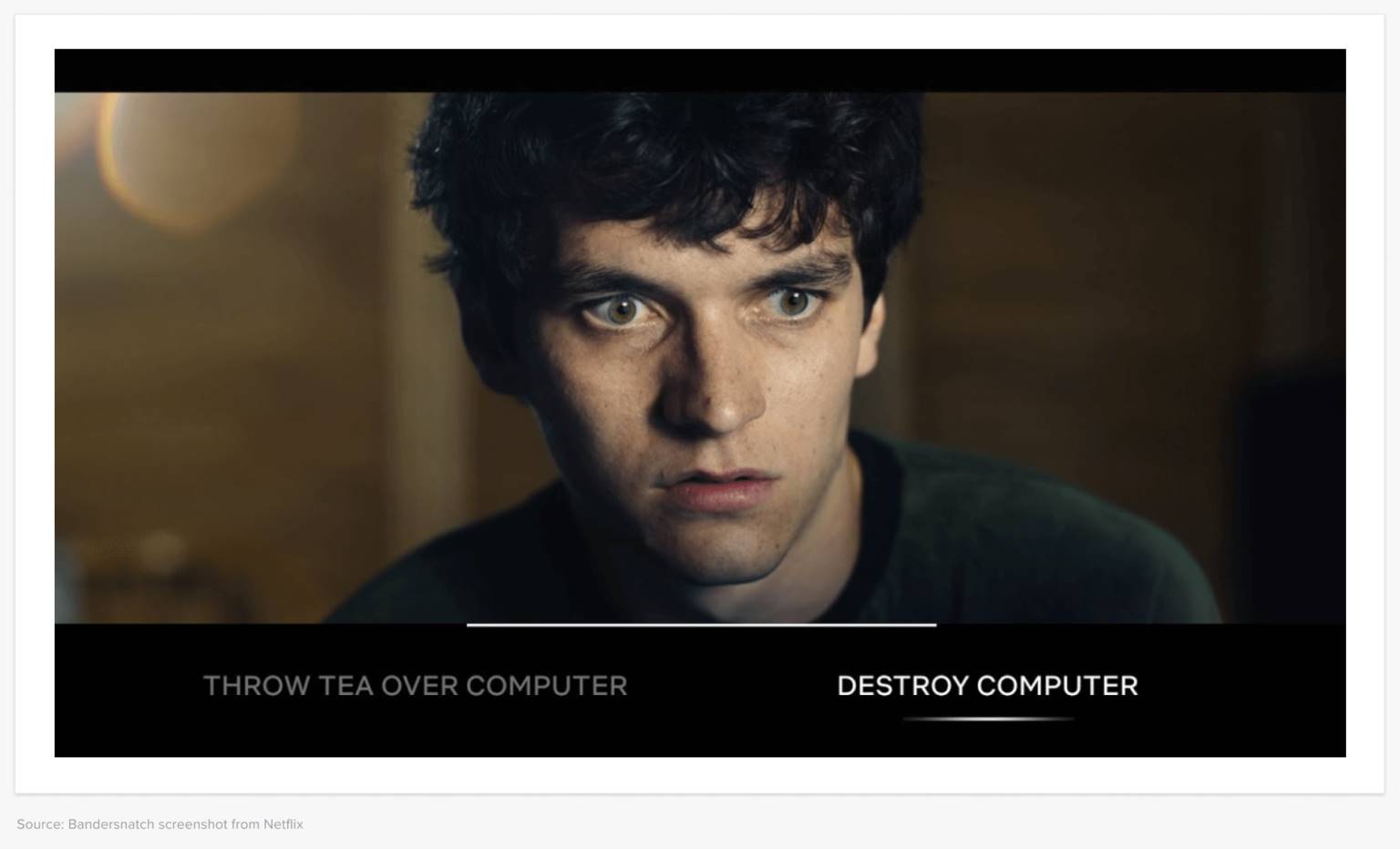

In 2017, Netflix also entered the interactive video field, starting with the animated Puss in Book and eventually releasing the highly acclaimed Black Mirror: Bandersnatch, a live-action film in which the audience makes choices on behalf of a young programmer adapting a fantasy book into a video game. Bandersnatch became a holiday phenomenon, attracting a cult following who made flowcharts documenting every possible ending of the film.

However, despite positive reviews, both Bandersnatch and The Walking Dead faced an existential crisis: it was too time-consuming and costly to manually create the countless branching stories that defined the format. As Telltale expanded into multiple projects, a culture of crunch took hold, with developers complaining of "fatigue and burnout". Narrative quality suffered: The Walking Dead initially earned a Metacritic score of 89, but four years later Telltale's release of one of its biggest IPs, Batman, drew a disappointing 64. In 2018, Telltale declared bankruptcy, having failed to establish a sustainable business model.

For Bandersnatch, the crew filmed 250 video segments comprising more than 5 hours of footage. The budget and production time were reportedly twice that of a standard Black Mirror episode, with the show's producers stating that the complexity of the project was equivalent to "4 episodes produced simultaneously". Finally, in 2024, Netflix decided to shut down its entire interactive specials division and switched to making traditional games.

Until now, the content cost of interactive video projects has scaled linearly with the length of playtime – there is no way around this. Advances in generative AI models may be the key to driving interactive video at scale.

Generative models will soon be fast enough to power interactive video

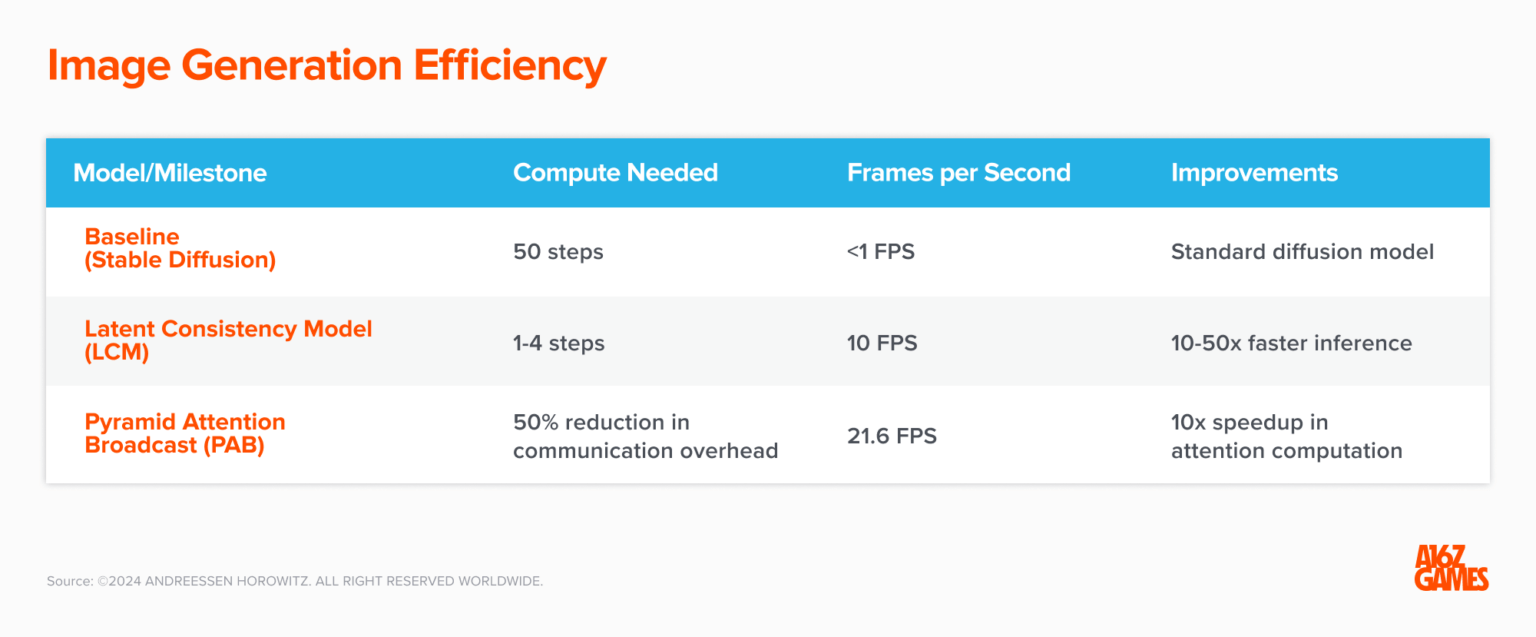

Recent progress in distilling image generation models has been remarkable. In 2023, the release of latent consistency models and SDXL Turbo significantly increased the speed and efficiency of image generation, enabling high-resolution rendering in a single step instead of the 20-30 steps previously required, and reducing cost by more than 30 times. The idea of generating video (a series of consistent images with variation between frames) suddenly became highly feasible.

Earlier this year, OpenAI drew widespread attention when it announced Sora, a text-to-video model that can generate videos up to 1 minute long while maintaining visual consistency. Not long after, Luma AI released an even faster video model, Dream Machine, capable of generating 120 frames in 120 seconds (about 5 seconds of video). Luma recently shared that it had attracted an astonishing 10 million users. Last month, Hedra Labs released Character-1, a character-focused multimodal video model that can generate 60-second videos in 90 seconds, showing rich human emotion and voice performance. Runway also recently released Gen-3 Turbo, a model that can render a 10-second clip in just 15 seconds.

Today, an aspiring filmmaker can quickly generate several minutes of 720p HD video from text prompts or reference images, optionally paired with start or end keyframes for added specificity. Runway has also developed a suite of editing tools that provide finer control over diffusion-generated video, including in-frame camera control, frame interpolation, and motion brushes. Luma and Hedra plan to launch their own creator tool suites in the near future.

Although it is still early days for production workflows, we have already met several content creators who are using these tools to tell stories. Resemblance AI created Nexus 1945, a compelling 3-minute alternate history of World War II, produced with Luma, Midjourney, and Eleven Labs. Independent filmmaker Uncanny Harry created a cyberpunk short film with Hedra. Creators have also produced music videos, trailers, travel vlogs, and even fast-food burger ads. Since 2022, Runway has held an annual AI Film Festival, selecting 10 outstanding AI-produced short films.

It is important to note that there are still limitations: there remains a clear gap in narrative quality and control between a 2-minute clip generated from a prompt and a 2-hour feature film produced by a professional team. It is often difficult to get the content a creator wants from a prompt or image, and even experienced prompt engineers usually discard most of what they generate. AI creator Abel Art reports that it takes about 500 generated videos to get one minute of coherent video. Image consistency typically starts to break down after a minute or two of continuous video and often requires manual editing, which is why most generated videos today are limited to about a minute in length.

For most professional Hollywood studios, video generated by diffusion models can be used for storyboarding in pre-production to visualize what a scene or character will look like, but it is not a replacement for on-location filming. There are also opportunities to use AI for audio and visual effects in post-production, but overall the AI creator toolkit is still in its early stages compared to traditional workflows that have seen decades of investment.

In the short term, one of the biggest opportunities for generative video lies in the development of new media formats, such as interactive video and short-form video. Interactive video is already broken into short 1-2 minute clips based on the player's choices, and it is often animated or stylized, which allows for lower-resolution footage. More importantly, creating these short clips with diffusion models is far more cost-effective than it was in the Telltale/Bandersnatch era: Abel Art estimates that 1 minute of video from Luma costs USD 125, roughly the cost of renting a cinema lens for one day.
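To put the per-minute estimate in perspective, here is a back-of-the-envelope calculation. It assumes, purely for illustration, that the USD 125 per minute figure stays constant at any length and ignores editing, writing, and other production costs; `generation_cost_usd` is a hypothetical helper, and the 5-hour length is borrowed from the Bandersnatch footage figure cited earlier.

```python
COST_PER_MINUTE_USD = 125.0  # Abel Art's estimate for 1 minute of Luma video


def generation_cost_usd(minutes: float, rate: float = COST_PER_MINUTE_USD) -> float:
    """Linear cost model: generated minutes times a flat per-minute rate."""
    return minutes * rate


# Bandersnatch-scale footage: 5 hours = 300 minutes.
bandersnatch_scale = generation_cost_usd(5 * 60)

# A single 2-minute interactive clip.
single_clip = generation_cost_usd(2)

print(f"5 hours of generated video: ${bandersnatch_scale:,.0f}")
print(f"One 2-minute clip: ${single_clip:,.0f}")
```

Under these assumptions the raw generation cost is still linear in playtime, but the per-minute rate is low enough that hours of branching content fall within reach of a small team.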

Although the quality of generated video today may be inconsistent, the popularity of short vertical video apps such as ReelShort and DramaBox has proven that audiences are hungry for episodic short-form television with low production values. Despite critics' complaints about amateurish cinematography and formulaic scripts, ReelShort has driven more than 30 million downloads and over $10 million in monthly revenue, launching thousands of mini-series such as Forbidden Desire: Alpha's Love.

The biggest technical hurdle facing interactive video is achieving a frame rate fast enough to generate content in real time. Dream Machine currently generates about 1 frame per second. The minimum acceptable target for modern game consoles is a stable 30 FPS, and 60 FPS is the gold standard. With the help of techniques like PAB, this can be improved to 10-20 FPS for certain video types, but that is still not fast enough.
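The size of the gap is easier to see as a per-frame time budget. The following sketch uses only the frame rates quoted above; the helper names are hypothetical.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame at a given frame rate."""
    return 1000.0 / fps


def required_speedup(current_fps: float, target_fps: float) -> float:
    """How many times faster generation must become to reach the target."""
    return target_fps / current_fps


for label, fps in [("about 1 FPS today", 1), ("PAB low end", 10), ("PAB high end", 20)]:
    print(
        f"{label}: {frame_budget_ms(fps):.1f} ms per frame, "
        f"{required_speedup(fps, 30):.1f}x short of 30 FPS, "
        f"{required_speedup(fps, 60):.1f}x short of 60 FPS"
    )
```

At 30 FPS a model has roughly 33 ms to produce each frame and at 60 FPS roughly 17 ms, so a generator running at 1 frame per second needs a 30x to 60x speedup, while one running at 10-20 FPS needs between 1.5x and 6x.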

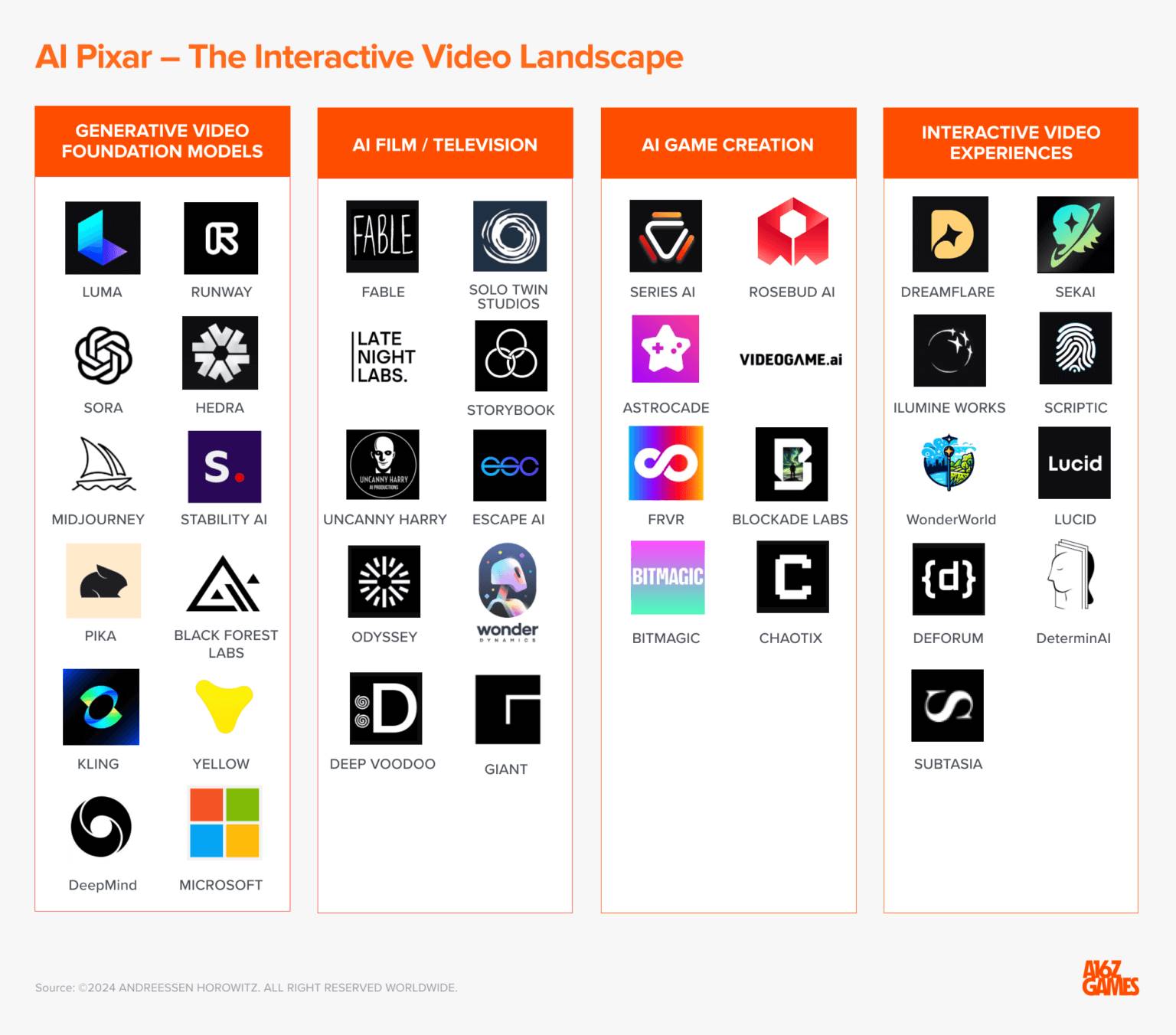

Current situation: the interactive video landscape

Given the rate of improvement we are seeing in the underlying hardware and models, we estimate that commercially viable fully generated interactive video is approximately 2 years away.

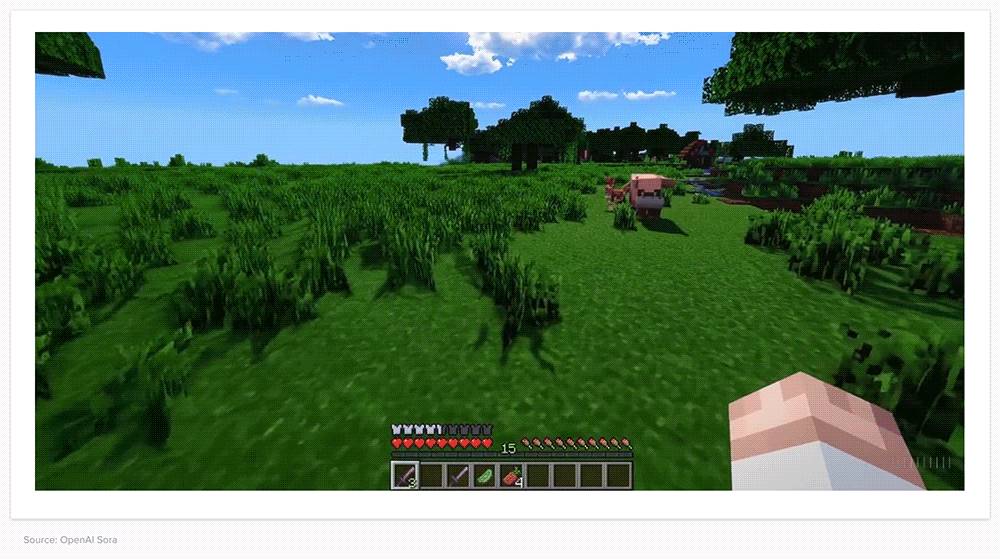

Today, we are seeing progress on the research front, with players such as Microsoft Research and OpenAI working towards end-to-end foundation models for interactive video. Microsoft's model aims to generate fully "playable world" 3D environments. OpenAI showed a demo of Sora in which the model performs "zero-shot" Minecraft simulation: "Sora can simultaneously control the actions of a player in Minecraft and render the world and its dynamics with high fidelity."

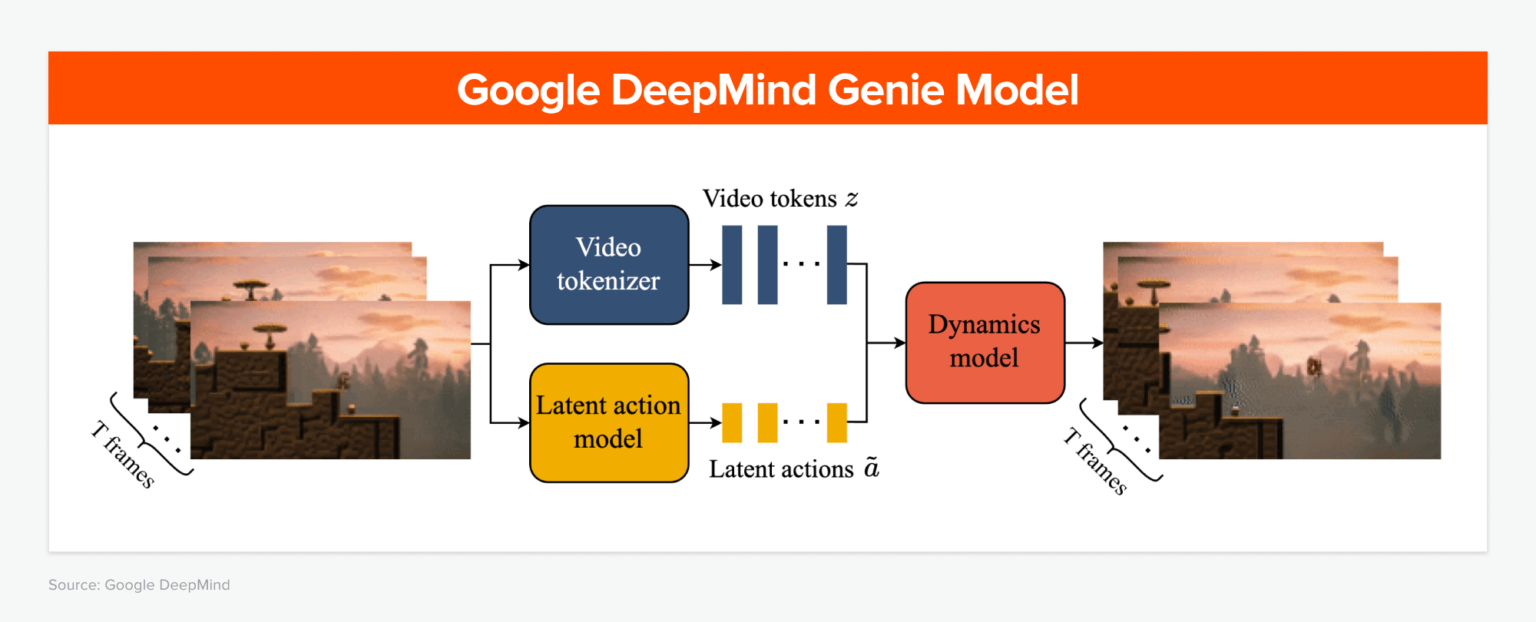

In February 2024, Google DeepMind released its own end-to-end foundation model for interactive video, Genie. What makes Genie unique is its latent action model, which infers the latent action between a pair of video frames. Trained on 300,000 hours of platformer videos, Genie learned to recognize character actions, such as how to get past an obstacle. This latent action model is combined with a video tokenizer and fed into a dynamics model, which predicts the next frame to build an interactive video.
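The data flow described above can be illustrated with a toy sketch. This is not Genie's implementation: each function below is a hand-written, hypothetical stand-in for a learned component (one that turns frames into discrete tokens, one that labels the transition between two frames with a latent action, and one that predicts the next frame from tokens plus an action), and frames are reduced to short lists of pixel values.

```python
from typing import List, Sequence

Tokens = List[int]


def tokenize(frame: Sequence[int]) -> Tokens:
    """Toy frame encoder: quantize pixel values (0-255) into 4 discrete tokens."""
    return [min(p // 64, 3) for p in frame]


def infer_latent_action(prev: Tokens, nxt: Tokens) -> int:
    """Toy latent action model: label the change between a pair of frames.

    Returns +1, 0, or -1 depending on the sign of the total token change.
    """
    delta = sum(n - p for p, n in zip(prev, nxt))
    return (delta > 0) - (delta < 0)


def dynamics(prev: Tokens, action: int) -> Tokens:
    """Toy dynamics model: predict next-frame tokens from tokens and an action."""
    return [max(0, min(3, t + action)) for t in prev]


def play(first_frame: Sequence[int], actions: Sequence[int]) -> List[Tokens]:
    """Roll out an 'interactive video': each player action yields a new frame."""
    tokens = tokenize(first_frame)
    rollout = [tokens]
    for action in actions:
        tokens = dynamics(tokens, action)
        rollout.append(tokens)
    return rollout


# Labeling a transition in unlabeled footage, then playing with chosen actions.
label = infer_latent_action(tokenize([0, 64]), tokenize([64, 128]))
frames = play([0, 64], actions=[1, 1, -1])
```

In this sketch the action labels are inferred from frame pairs alone, with no recorded controller input, and at play time the player supplies the action directly; treating those as separate phases is a simplifying assumption of the example.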

On an applied level, we have seen teams exploring new types of interactive video experiences. Many companies are working on generative movies or TV shows, designed and developed around the limitations of current models. We have also seen teams adding video elements to AI-native game engines.

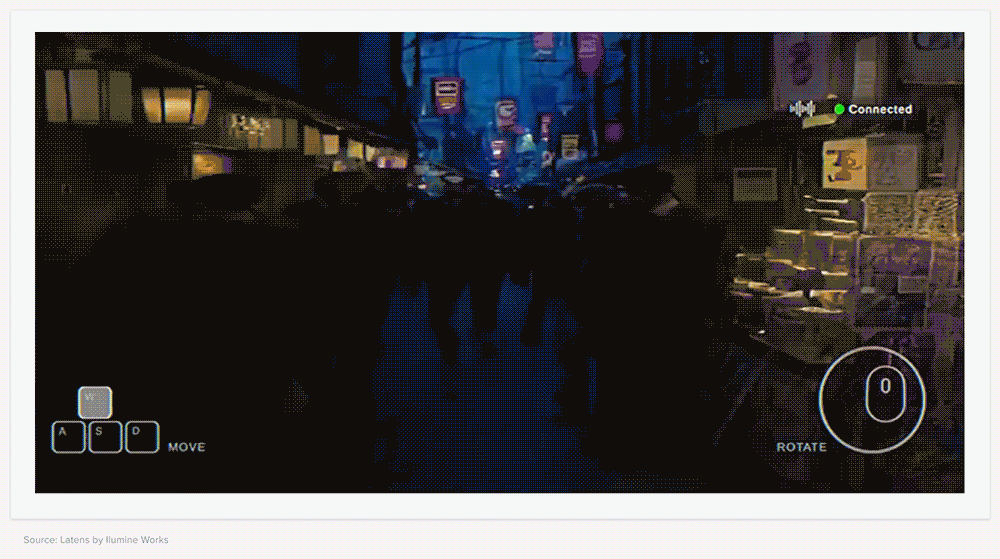

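Ilumine Latens is developing a "lucid dream simulator" in which frames are generated in real time as the user walks through a dreamscape. The slight lag helps create a surreal experience. Developers in the open-source community Deforum are creating real-world installations with immersive, interactive video. Dynamic is developing a simulation engine in which the user can control a robot from a first-person perspective using fully generated video.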

In television and film, Fable Studio is developing Showrunner, an AI streaming service that allows fans to create their own versions of popular shows. Fable's proof-of-concept project, South Park AI, drew 8 million views when it premiered last summer. Solo Twin and Uncanny Harry are two cutting-edge AI filmmaking studios. Alterverse created a D&D-inspired interactive video role-playing game in which the community decides what happens next. Late Night Labs is a new top-tier film production company integrating AI into the creative process. Odyssey is developing a visual storytelling platform powered by 4 generative models.

As the line between film and games blurs, we will see the emergence of AI-native game engines and tools that give creators more control. Series AI has developed the Rho Engine, an end-to-end platform for AI game development, and is using it to co-develop original titles with major IP holders. We have also seen AI creation suites from Rosebud AI, Astrocade, and Videogame AI that allow people who are new to programming or art to quickly get started creating interactive experiences.

These new AI creation suites will open up the market for storytelling, empowering a new class of citizen creators to bring their imaginations to life using prompt engineering, visual sketching, and voice recognition.

Who will create the interactive version of Pixar?

Pixar was able to leverage foundational technological changes in computers and 3D graphics to build an iconic company. Today, a similar wave is under way in generative AI. However, it is important to remember that Pixar's success owes a great deal to Toy Story and the other classic animated films created by the world-class storytelling team led by John Lasseter. Human creativity combined with new technology creates the best stories.

Likewise, we believe that the next Pixar will need to be a world-class interactive storytelling studio as well as a top technology company. Given the rapid pace of AI research, creative teams need to work closely with AI teams to blend narrative and game design with technical innovation. Pixar had a unique team that blended art and technology, along with a partnership with Disney. Today's opportunity lies in a new team able to merge the disciplines of games, movies, and AI.

To be clear, this will be a huge challenge, and not just limited by technology. The team needs to explore new ways for human storytellers to work in collaboration with AI tools to enhance rather than diminish their imaginations. In addition, there are many legal and ethical hurdles that need to be addressed - unless creators can prove ownership of all the data used to train the model, the legal ownership and copyright protection of AI-generated creative works remains unclear. The issue of compensation for the original writers, artists, and producers behind the training data also needs to be addressed.

However, it is also clear today that there is strong demand for new interactive experiences. In the long run, the next Pixar could not only create interactive stories, but also build entire virtual worlds. We have previously discussed the potential of endless games: dynamic worlds that fuse real-time level generation, personalized storytelling, and intelligent agents, similar to what HBO envisioned with Westworld. Interactive video addresses one of the biggest challenges in bringing Westworld to life: generating large volumes of personalized, high-quality interactive content quickly.

One day, with the help of AI, we might start the creative process by building a story world (a world of intellectual property that we envision fully formed, complete with characters, narrative lines, visuals, and so on) and then generate the various media products we want to deliver to a particular audience or context. This would be the ultimate evolution of transmedia storytelling, completely blurring the lines between traditional media forms.

Pixar, Disney, and Marvel have all been able to create unforgettable worlds that become a core part of fans' identities. The opportunity for an interactive Pixar lies in using generative AI to achieve the same goal: to create new story worlds that blur the boundaries of traditional storytelling formats, worlds unlike anything seen before.
